C4Synth: Cross-Caption Cycle-Consistent Text-to-Image Synthesis

Winter Conference on Applications of Computer Vision (WACV), Hawaii, USA. 2019

Prologue

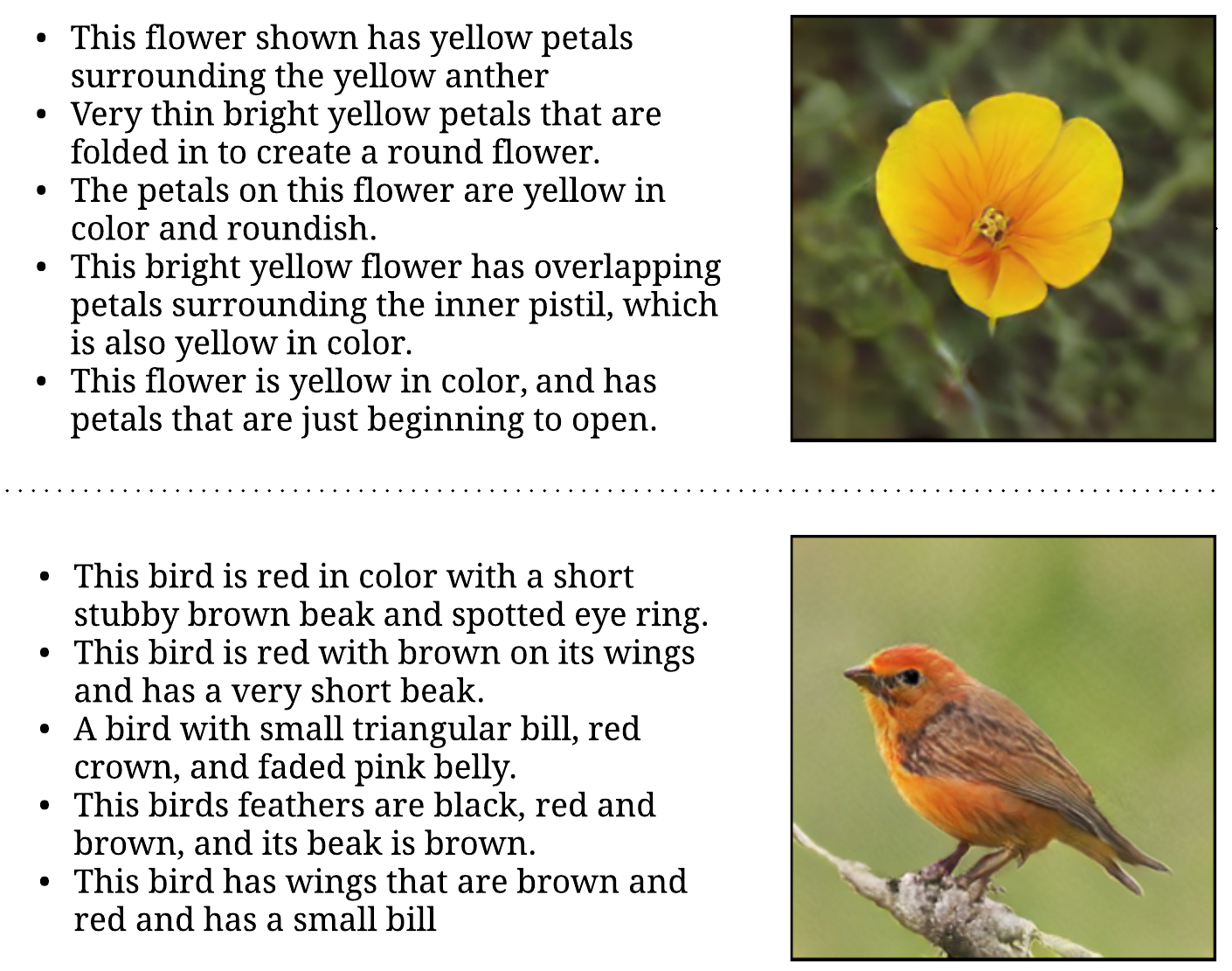

Generating an image from its description is a challenging task worth solving because of its numerous practical applications, ranging from image editing to virtual reality. All existing methods use a single caption to generate a plausible image. A single caption can be limiting, and may not capture the variety of concepts and behavior present in the image. We propose two deep generative models that generate an image by making use of multiple captions describing it. This is achieved by ensuring 'Cross-Caption Cycle Consistency' between the multiple captions and the generated image(s). We report quantitative and qualitative results on the standard Caltech-UCSD Birds (CUB) and Oxford-102 Flowers datasets to validate the efficacy of the proposed approach.
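The idea of a consistency term between several captions and the generated image(s) can be illustrated with a small sketch. It is not the authors' implementation, and it rests on assumptions that this page does not state: captions are represented as plain embedding vectors, each generation step may see the previous image, the image produced from caption i is compared against caption i+1 (wrapping around to the first caption), and the comparison is a squared distance. The names `generate`, `describe`, `toy_generate` and `toy_describe` are hypothetical stand-ins for a generator network and an image captioner.

```python
from typing import Callable, List, Optional

Vector = List[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Sum of squared element-wise differences between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cross_caption_cycle_loss(
    captions: List[Vector],
    generate: Callable[[Vector, Optional[Vector]], Vector],
    describe: Callable[[Vector], Vector],
) -> float:
    """Average consistency penalty over a cycle of captions.

    Assumed pairing (not stated on this page): the image generated at
    step i is described, and that description is compared with the
    NEXT caption; the last step wraps around to the first caption.
    """
    if not captions:
        raise ValueError("at least one caption is required")

    total = 0.0
    image: Optional[Vector] = None
    for i, caption in enumerate(captions):
        # Hypothetical generator: consumes a caption and the previous image.
        image = generate(caption, image)
        # Hypothetical captioner: maps the image back to caption space.
        predicted = describe(image)
        target = captions[(i + 1) % len(captions)]
        total += squared_distance(predicted, target)
    return total / len(captions)


# Toy stand-ins so the sketch runs without any deep learning library.
def toy_generate(caption: Vector, previous_image: Optional[Vector]) -> Vector:
    if previous_image is None:
        return list(caption)
    # Blend the new caption with what has been generated so far.
    return [(c + p) / 2 for c, p in zip(caption, previous_image)]


def toy_describe(image: Vector) -> Vector:
    return list(image)


same_captions = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]
loss_same = cross_caption_cycle_loss(same_captions, toy_generate, toy_describe)

different_captions = [[0.0], [2.0]]
loss_diff = cross_caption_cycle_loss(different_captions, toy_generate, toy_describe)
```

In this toy setting, captions that agree with each other give a zero penalty, while captions that disagree give a positive one. In a real model the two stand-in functions would be trainable networks, and a term of this kind would be combined with the usual adversarial objectives.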

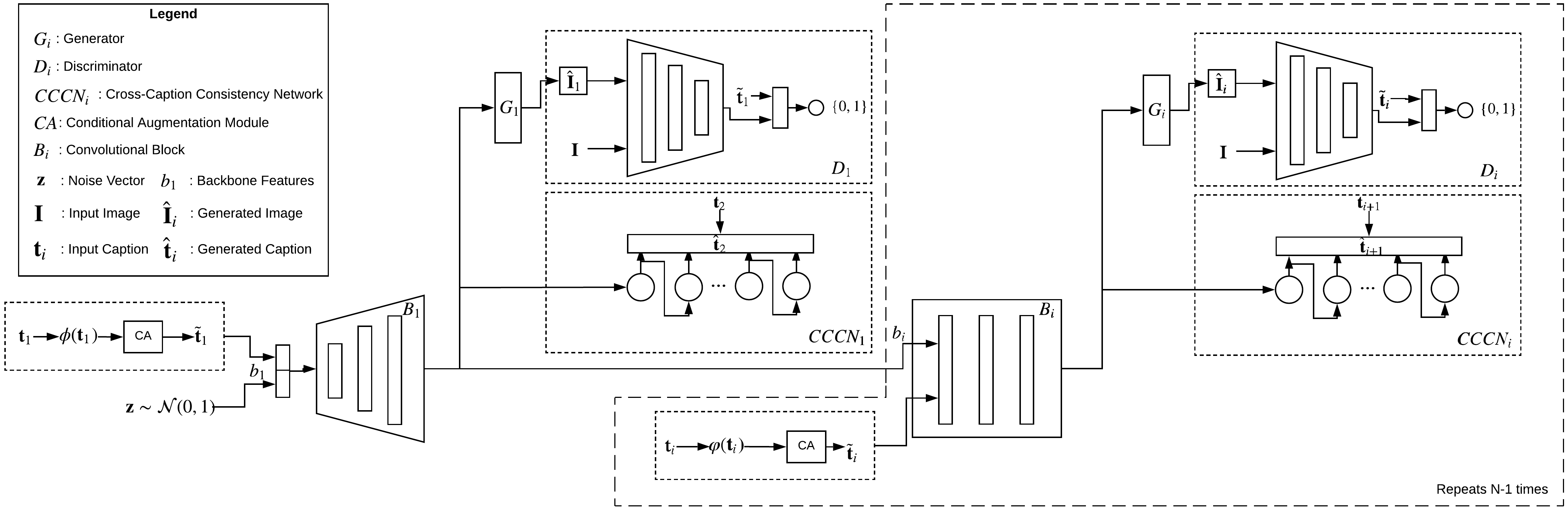

Cascaded Architecture

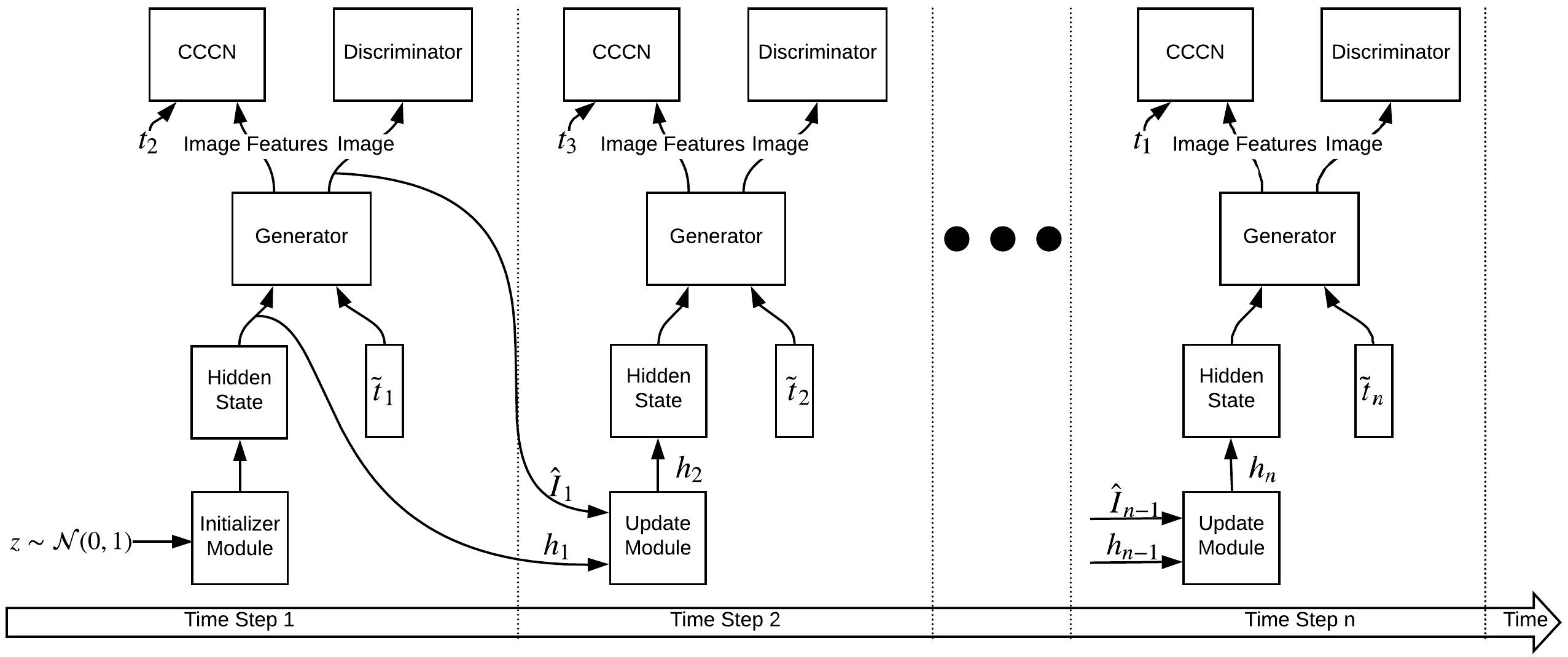

Recurrent Architecture

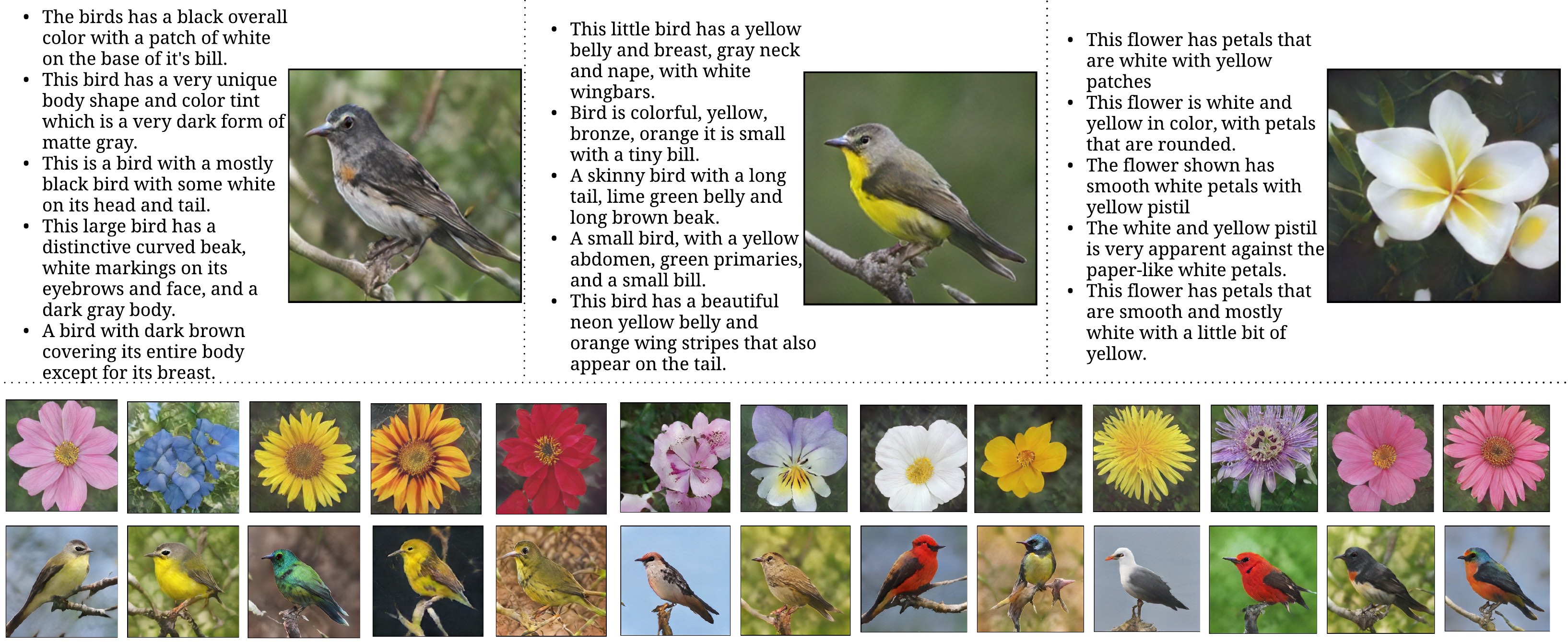

Results

Code

Code for Recurrent C4Synth: https://github.com/JosephKJ/aRTISt

Code for Cascaded C4Synth: https://github.com/JosephKJ/DistillGAN